IOT- Chapter 12: IoT Data Management and Analytics

I. The IoT Data Lifecycle: From Sensing to Insight

Before delving into specifics, it's crucial to understand the journey of data through an IoT system. This lifecycle provides context for all subsequent steps.

1. Data Collection (Perception Layer): Sensors gather raw data from the physical world.

2. Data Transmission (Network Layer): Data is sent from devices to gateways and then to the cloud (or directly to the cloud if the device supports it) using various protocols.

3. Data Ingestion: Securely and reliably receiving data at scale into the cloud platform.

4. Data Pre-processing/Transformation: Cleaning, filtering, validating, and enriching the raw data. This often happens both at the edge (gateway) and in the cloud.

5. Data Storage: Persisting the processed and raw data in appropriate databases or storage systems.

6. Data Processing and Analytics: Applying algorithms to the data to derive insights, detect patterns, predict events, or trigger actions. This can be real-time (stream processing) or historical (batch processing).

7. Data Visualization and Application: Presenting insights to users through dashboards, reports, and integrating data into business applications.

8. Action/Feedback Loop: Based on insights, actions are triggered (e.g., control actuators, send alerts, update business systems).

II. Data Ingestion: The Gateway to the Cloud

This is the entry point for all IoT data into the cloud infrastructure.

A. Scalability and Reliability: IoT ingestion services must handle millions of concurrent connections and billions of messages reliably, without dropping data.

B. Security: Authentication (verifying device identity) and authorization (controlling what data a device can send or receive) are paramount at this stage. Data must also be encrypted in transit using TLS (the successor to the now-deprecated SSL).

C. Protocols for Ingestion:

MQTT: Most common for device-to-cloud due to its lightweight nature, QoS levels, and pub/sub model. Messages are routed to specific services based on topics.

HTTPS: Used by gateways or more capable devices for RESTful API calls to send data, especially for larger payloads or less frequent updates.

AMQP (Advanced Message Queuing Protocol): Another open standard for message-oriented middleware. It is often used in enterprise messaging systems and can also be used for IoT.
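
To make the topic-based routing mentioned for MQTT more concrete, the following is a minimal sketch of how a subscription filter with the standard MQTT wildcards (`+` for one level, `#` for all remaining levels) can be matched against a topic. The topic names, the `ROUTES` table, and the destination names are hypothetical, and the sketch ignores special cases such as topics beginning with `$`; a real broker or cloud rules engine handles this matching for you.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic matches a subscription filter.

    '+' matches exactly one level; '#' matches all remaining levels
    and is only valid as the last level of the filter.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # '#' must be the final level of the filter to be valid.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


# Hypothetical routing table: topic filter -> downstream destination.
ROUTES = {
    "factory/+/temperature": "timeseries_store",
    "factory/+/alarm": "alerting",
    "factory/#": "raw_archive",
}


def route(topic: str) -> list[str]:
    """Return every destination whose filter matches the topic."""
    return [dest for flt, dest in ROUTES.items() if topic_matches(flt, topic)]


print(route("factory/line1/temperature"))
```

A message published to `factory/line1/temperature` matches both the specific temperature filter and the catch-all archive filter, which is how one message can feed several services.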

D. Cloud Ingestion Services:

AWS IoT Core: Manages connectivity, device registry, and rules engine for routing messages.

Azure IoT Hub: Provides secure bi-directional communication, device management, and message routing.

Google Cloud Pub/Sub: A robust, scalable messaging service that acts as an ingestion point. Although it is not IoT-specific, it is widely used for IoT workloads.

III. Data Pre-processing and Transformation

Raw sensor data is often messy and needs significant refinement before it can be truly useful. This happens at the edge (gateway) and/or in the cloud.

A. Why Pre-process?

Reduce Noise: Filter out spurious readings or sensor errors.

Reduce Volume: Minimize the amount of data sent to storage/analytics engines (cost and performance).

Normalize Data: Convert different units, calibrate sensor readings, align data types.

Enrich Data: Add contextual information (e.g., device ID, location, timestamp, associated asset information from an ERP system).

Format Data: Convert to a consistent structure (e.g., JSON, Avro, Protobuf).
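
As an illustration of normalizing, enriching, and formatting, here is a small sketch that converts a raw reading to a common unit, attaches context, and emits a consistent structure. The field names (`device_id`, `value`, `unit`, `ts`) and the `ASSET_REGISTRY` lookup are assumptions made for the example; in practice the context would come from a device registry or ERP system.

```python
from datetime import datetime, timezone

# Hypothetical master data keyed by device ID.
ASSET_REGISTRY = {
    "dev-001": {"site": "Plant A", "asset": "Pump 7"},
}


def fahrenheit_to_celsius(value_f: float) -> float:
    return (value_f - 32.0) * 5.0 / 9.0


def normalize_and_enrich(raw: dict) -> dict:
    """Convert a raw reading to Celsius, ISO-8601 UTC time, and add asset context."""
    value = float(raw["value"])  # align data types (payload may carry strings)
    if raw.get("unit", "C").upper() == "F":
        value = fahrenheit_to_celsius(value)  # normalize units
    record = {
        "device_id": raw["device_id"],
        "temperature_c": round(value, 2),
        "timestamp": datetime.fromtimestamp(raw["ts"], tz=timezone.utc).isoformat(),
    }
    # Enrich with contextual information; unknown devices get no extra fields.
    record.update(ASSET_REGISTRY.get(raw["device_id"], {}))
    return record


print(normalize_and_enrich({"device_id": "dev-001", "value": "212", "unit": "F", "ts": 0}))
```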

B. Common Pre-processing Techniques:

Filtering: Removing duplicate readings, values outside a valid range, or insignificant changes.

Aggregation: Summarizing data over time (e.g., calculating average temperature every 5 minutes from second-by-second readings).

Sampling: Taking readings at specific intervals, rather than continuously.

Deduplication: Removing identical messages received due to network retries.

Normalization/Scaling: Adjusting data to a common range or distribution for machine learning.

Contextualization: Joining sensor data with master data from enterprise systems (e.g., linking a device ID to its installation location, last maintenance date, or associated customer).
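
Several of the techniques above can be combined in a single pass. The sketch below applies deduplication, range filtering, and 5-minute aggregation to a list of readings. The tuple layout `(message_id, unix_ts, value)`, the valid range, and the window size are assumptions chosen for illustration, not values prescribed by any platform.

```python
from collections import defaultdict
from statistics import mean

VALID_RANGE = (-40.0, 125.0)  # assumed sensor operating range
WINDOW_SECONDS = 300          # 5-minute aggregation window


def preprocess(readings):
    """Deduplicate, range-filter, and average readings per 5-minute window.

    readings: iterable of (message_id, unix_ts, value) tuples.
    Returns a dict mapping window start time to the mean value in that window.
    """
    seen_ids = set()
    windows = defaultdict(list)
    for message_id, ts, value in readings:
        if message_id in seen_ids:
            continue  # deduplication: drop messages repeated by network retries
        seen_ids.add(message_id)
        if not (VALID_RANGE[0] <= value <= VALID_RANGE[1]):
            continue  # filtering: drop values outside the valid range
        window_start = ts - (ts % WINDOW_SECONDS)
        windows[window_start].append(value)  # aggregation bucket
    return {start: mean(values) for start, values in sorted(windows.items())}


sample = [
    ("a", 0, 20.0),
    ("a", 0, 20.0),    # duplicate delivery
    ("b", 60, 22.0),
    ("c", 120, 999.0),  # out of range
    ("d", 300, 30.0),
]
print(preprocess(sample))
```

Keeping a set of seen message IDs is only practical for bounded batches; a long-running gateway would typically expire old IDs after some time.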

IV. Data Storage Strategies for IoT

Choosing the right database is crucial for performance, scalability, and cost efficiency. IoT data has distinctive characteristics: it is typically time-stamped (time-series) and arrives in high volume.

A. Time-Series Databases (TSDBs):

Characteristics: Optimized for storing and querying time-stamped data points (e.g., sensor readings over time). Excellent for rapid writes and range queries (e.g., "show me the temperature for the last 24 hours").

Examples: InfluxDB, TimescaleDB, Amazon Timestream, Azure Data Explorer, Google Cloud Bigtable (a wide-column store that can also be used for time-series data).

Use Cases: Most common for raw and aggregated sensor data.
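
The "last 24 hours" range query can be sketched with Python's built-in `sqlite3` module. SQLite is used here only as a stand-in so the example runs anywhere; it is not a time-series database, and the table and column names are assumptions. A dedicated TSDB would add features such as time-based partitioning, compression, and retention policies on top of the same access pattern.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (device_id TEXT, ts INTEGER, temperature REAL)")
# An index on (device_id, ts) lets range queries avoid scanning the whole table.
conn.execute("CREATE INDEX idx_readings_device_ts ON readings (device_id, ts)")

now = 1_700_000_000  # fixed "current" Unix time so the example is reproducible
rows = [("dev-001", now - hours * 3600, 20.0 + hours) for hours in range(48)]
conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", rows)


def last_24_hours(device_id, now_ts):
    """Return (ts, temperature) rows for one device over the previous 24 hours."""
    cursor = conn.execute(
        "SELECT ts, temperature FROM readings "
        "WHERE device_id = ? AND ts > ? AND ts <= ? ORDER BY ts",
        (device_id, now_ts - 24 * 3600, now_ts),
    )
    return cursor.fetchall()


recent = last_24_hours("dev-001", now)
print(len(recent), recent[-1])
```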

B. NoSQL Databases:

Characteristics: Non-relational, flexible schema, highly scalable for large volumes of unstructured or semi-structured data.

Types:

Key-Value Stores: Redis, DynamoDB (AWS), Azure Cosmos DB (table API).

Document Databases: MongoDB, Azure Cosmos DB (document API).

Wide-Column Stores: Cassandra, Google Cloud Bigtable.

Use Cases: Device metadata, user profiles, sensor data with varying attributes, caching.

C. Relational Databases (SQL):

Characteristics: Structured data, strong consistency, uses SQL for querying. Good for complex relationships.

Examples: PostgreSQL, MySQL, SQL Server, Oracle.

Use Cases: Device registries, configuration data, user management, small-to-medium scale aggregated data, integration with traditional business applications. Less ideal for raw, high-volume sensor data.

D. Object Storage / Data Lakes:

Characteristics: Extremely scalable, highly durable, cost-effective storage for vast amounts of unstructured or semi-structured data (raw data, images, video, logs).

Examples: AWS S3, Azure Blob Storage, Google Cloud Storage.

Use Cases: Long-term archival of raw IoT data, source for batch analytics and machine learning training, storing firmware updates.
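
When raw data is archived to object storage, a common convention is to encode the date and device in the object key so that batch jobs can read only the partitions they need. The sketch below builds such a key. The `raw/` prefix and the `year=/month=/day=` layout are assumptions for illustration; object stores do not require any particular scheme.

```python
from datetime import datetime, timezone


def raw_object_key(device_id: str, unix_ts: int, prefix: str = "raw") -> str:
    """Build a date-partitioned object key for one raw message (UTC-based)."""
    t = datetime.fromtimestamp(unix_ts, tz=timezone.utc)
    return (
        f"{prefix}/year={t:%Y}/month={t:%m}/day={t:%d}/"
        f"device_id={device_id}/{unix_ts}.json"
    )


print(raw_object_key("dev-001", 0))
```

In practice, many small messages are usually batched into larger objects before upload, since per-object overhead makes one object per reading inefficient.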

V. Real-time vs. Batch Processing for IoT Analytics

Different analytical approaches are required depending on the latency and data volume needs.

A. Real-time (Stream) Processing:

Purpose: Analyze data as it arrives, enabling immediate insights and actions. Low latency is critical.

How it Works: Data streams are continuously processed by stream processing engines. Rules are applied, anomalies are detected, and immediate alerts or actions are triggered.

Examples:

Cloud Services: AWS Kinesis (Data Streams, Firehose, Analytics), Azure Stream Analytics, Google Cloud Dataflow (stream processing mode).

Open Source: Apache Kafka Streams, Apache Flink, Apache Spark Streaming.

Use Cases:

Anomaly Detection: Instantly identify equipment malfunction, security breaches, or unusual environmental conditions.

Real-time Monitoring & Alerting: Triggering SMS/email/app notifications when thresholds are crossed.

Predictive Maintenance (Initial Alerts): Sending immediate alerts based on streaming data patterns.

Real-time Dashboards: Displaying live updates of sensor values.
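
To illustrate processing data as it arrives, here is a minimal sketch of streaming anomaly detection using a rolling z-score: each new value is compared against the mean and standard deviation of a recent window. The class name, window size, warm-up length, and threshold are all assumptions; the engines listed above provide windowing, state management, and fault tolerance that this sketch does not.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flag values that deviate strongly from a sliding window of recent values."""

    def __init__(self, window_size=20, z_threshold=3.0, min_samples=5):
        self.window = deque(maxlen=window_size)
        self.z_threshold = z_threshold
        self.min_samples = min_samples

    def update(self, value):
        """Process one reading; return True if it looks anomalous."""
        is_anomaly = False
        if len(self.window) >= self.min_samples:
            mu = mean(self.window)
            sigma = pstdev(self.window)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                is_anomaly = True
        if not is_anomaly:
            # Keep anomalies out of the baseline so they do not distort it.
            self.window.append(value)
        return is_anomaly


detector = RollingAnomalyDetector()
stream = [20.0, 20.5, 19.5, 20.2, 19.8, 20.1, 35.0, 20.0]
flags = [detector.update(v) for v in stream]
print(flags)
```

Only the spike to 35.0 is flagged; in a real pipeline, a `True` result would trigger the alert or action rather than a print statement.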

B. Batch Processing:

Purpose: Analyze large volumes of historical data to uncover deeper patterns, trends, and long-term insights. Higher latency is acceptable.

How it Works: Data is collected over a period (hours, days, weeks) and then processed in large chunks. Ideal for complex statistical analysis and machine learning model training.

Examples:

Cloud Services: AWS Glue, Azure Data Factory, Google Cloud Dataflow (batch processing mode), Google Cloud Dataproc.

Open Source: Apache Spark (batch), Hadoop MapReduce.

Use Cases:

Long-term Trend Analysis: Identifying seasonal patterns in energy consumption or environmental data.

Predictive Modeling: Training machine learning models using historical data for more accurate predictions (e.g., predicting equipment lifespan).

Root Cause Analysis: Investigating the causes of past failures.

Reporting and Business Intelligence: Generating daily, weekly, or monthly reports on operational efficiency, resource utilization, etc.
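
A batch job for long-term trend analysis can be sketched as two steps over collected history: roll readings up into daily averages, then fit a least-squares line to estimate the trend. The input layout `(unix_ts, value)` and the function names are assumptions; at real scale the same logic would run on a framework such as Spark rather than in a single Python process.

```python
from collections import defaultdict
from statistics import mean

SECONDS_PER_DAY = 86_400


def daily_averages(readings):
    """readings: iterable of (unix_ts, value). Returns [(day_index, mean_value)]."""
    by_day = defaultdict(list)
    for ts, value in readings:
        by_day[ts // SECONDS_PER_DAY].append(value)
    return [(day, mean(values)) for day, values in sorted(by_day.items())]


def linear_trend(points):
    """Ordinary least-squares slope of (x, y) points, in y-units per x-unit.

    Requires at least two distinct x values.
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x_bar, y_bar = mean(xs), mean(ys)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in points)
    denominator = sum((x - x_bar) ** 2 for x in xs)
    return numerator / denominator


history = [(0, 10.0), (3600, 12.0), (86400, 13.0), (90000, 15.0), (172800, 17.0)]
daily = daily_averages(history)
slope = linear_trend(daily)
print(daily, slope)
```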

VI. Basic Data Visualization for IoT

Making sense of vast amounts of data requires effective visual representation.

A. Importance:

Understanding Trends: Easier to spot patterns and anomalies.

Decision Support: Provides clear, concise information for quick decision-making.

Monitoring: Real-time dashboards enable continuous oversight of systems.

B. Common Visualization Types for IoT Data:

Line Charts: Ideal for time-series data (temperature over time, energy consumption).

Gauge Charts: Displaying current status against a range (e.g., fluid level, pressure).

Bar Charts: Comparing discrete values (e.g., device uptime, error counts per device).

Heatmaps: Showing distribution or intensity of a variable over space or time (e.g., temperature across a room, activity intensity).

Geospatial Maps: Displaying device locations, movement tracks, or geographically distributed sensor data.

C. Tools for IoT Visualization:

Cloud-Native Dashboards: Built-in dashboards provided by IoT platforms (e.g., AWS IoT Analytics dashboards, Azure IoT Central).

Business Intelligence (BI) Tools: Tableau, Power BI, and Looker Studio (formerly Google Data Studio) for more complex reporting and integration with other data sources.

Open Source Tools: Grafana (highly popular for time-series data), Kibana.

Custom Web/Mobile Applications: Building bespoke interfaces using web frameworks (React, Angular, Vue.js) or mobile development tools.

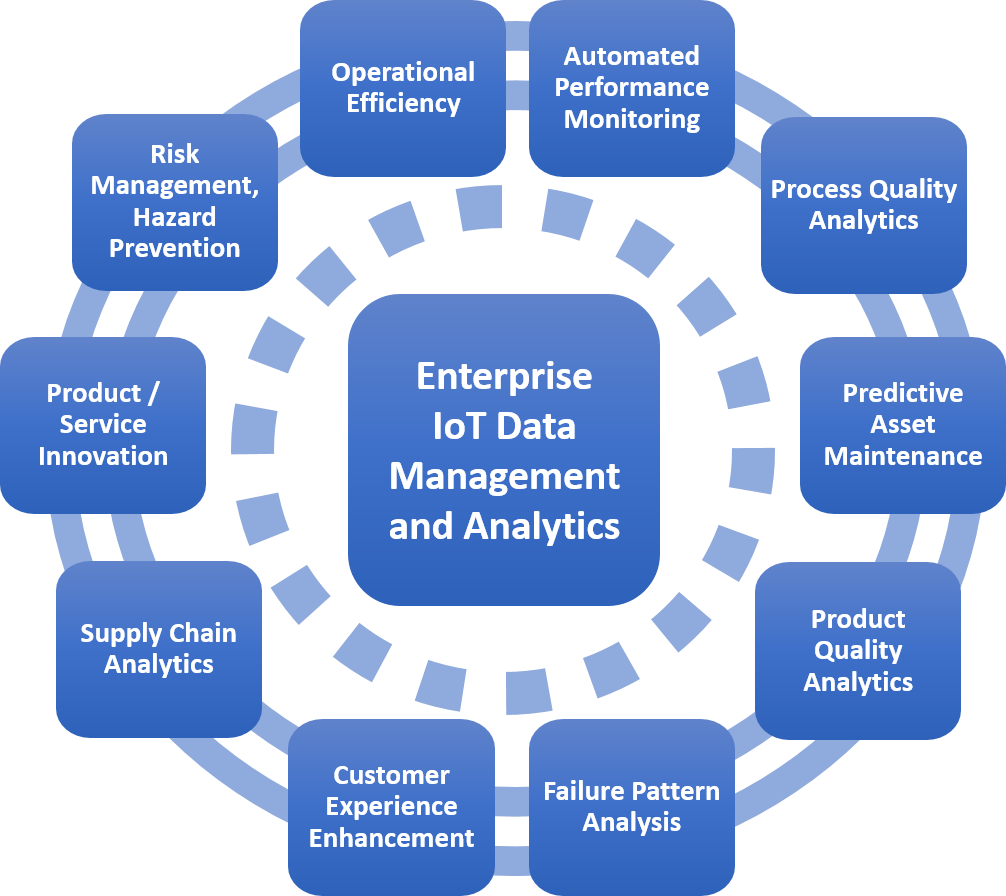

VII. Introduction to IoT Analytics: From Data to Value

IoT analytics goes beyond simple data display, focusing on extracting actionable intelligence.

A. Descriptive Analytics ("What happened?"):

Purpose: Summarizing past events and current states.

Examples: Current temperature reading, average energy consumption last month, number of devices online.

B. Diagnostic Analytics ("Why did it happen?"):

Purpose: Investigating the causes of past events or performance.

Examples: Analyzing historical data to determine why a machine failed, identifying the source of a network anomaly.

C. Predictive Analytics ("What will happen?"):

Purpose: Using historical data and statistical models (often machine learning) to forecast future outcomes or probabilities.

Examples: Predicting when a machine will likely fail (predictive maintenance), forecasting energy demand, predicting weather patterns.

D. Prescriptive Analytics ("What should I do?"):

Purpose: Recommending specific actions to take to achieve desired outcomes, often based on predictive models.

Examples: Suggesting optimal maintenance schedules, recommending adjustments to HVAC systems for energy efficiency, autonomously re-routing traffic based on predicted congestion.
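
The progression from descriptive to predictive to prescriptive analytics can be sketched on a single series of daily vibration readings. The threshold, lead time, and recommendation strings are hypothetical, and the "model" is a simple linear extrapolation rather than machine learning; diagnostic analytics is omitted because it usually needs additional data sources to explain a cause.

```python
from statistics import mean

FAILURE_THRESHOLD = 10.0  # assumed vibration limit
LEAD_TIME_DAYS = 7        # assumed time needed to schedule maintenance


def descriptive(values):
    """What happened? Summarize the current state and recent history."""
    return {"latest": values[-1], "average": mean(values)}


def predictive_days_to_threshold(values):
    """What will happen? Extrapolate the average daily change to the limit."""
    daily_change = (values[-1] - values[0]) / (len(values) - 1)
    if daily_change <= 0:
        return None  # no upward trend, so no crossing is predicted
    return (FAILURE_THRESHOLD - values[-1]) / daily_change


def prescriptive(days_remaining):
    """What should I do? Turn the prediction into a recommended action."""
    if days_remaining is None:
        return "continue monitoring"
    if days_remaining <= LEAD_TIME_DAYS:
        return "schedule maintenance now"
    return "plan maintenance at next service window"


vibration = [4.0, 4.5, 5.0, 5.5, 6.0, 6.5, 7.0, 7.5]  # one reading per day
summary = descriptive(vibration)
days_left = predictive_days_to_threshold(vibration)
action = prescriptive(days_left)
print(summary, days_left, action)
```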

Chapter 12 is fundamental to realizing the business value of IoT. It details the journey of data from raw bits to actionable intelligence, covering critical steps like ingestion, pre-processing, storage, and the various analytical approaches. This knowledge empowers learners to design IoT solutions that don't just collect data, but effectively leverage it to drive informed decisions and automated actions.